AIGNE Hub: The Next Evolution of AI Kit#

AIGNE Hub is the upgraded, reimagined evolution of AI Kit—your all‑in‑one gateway for building, managing, and scaling applications powered by Large Language Models (LLMs). It delivers:

- A single API to access any major model

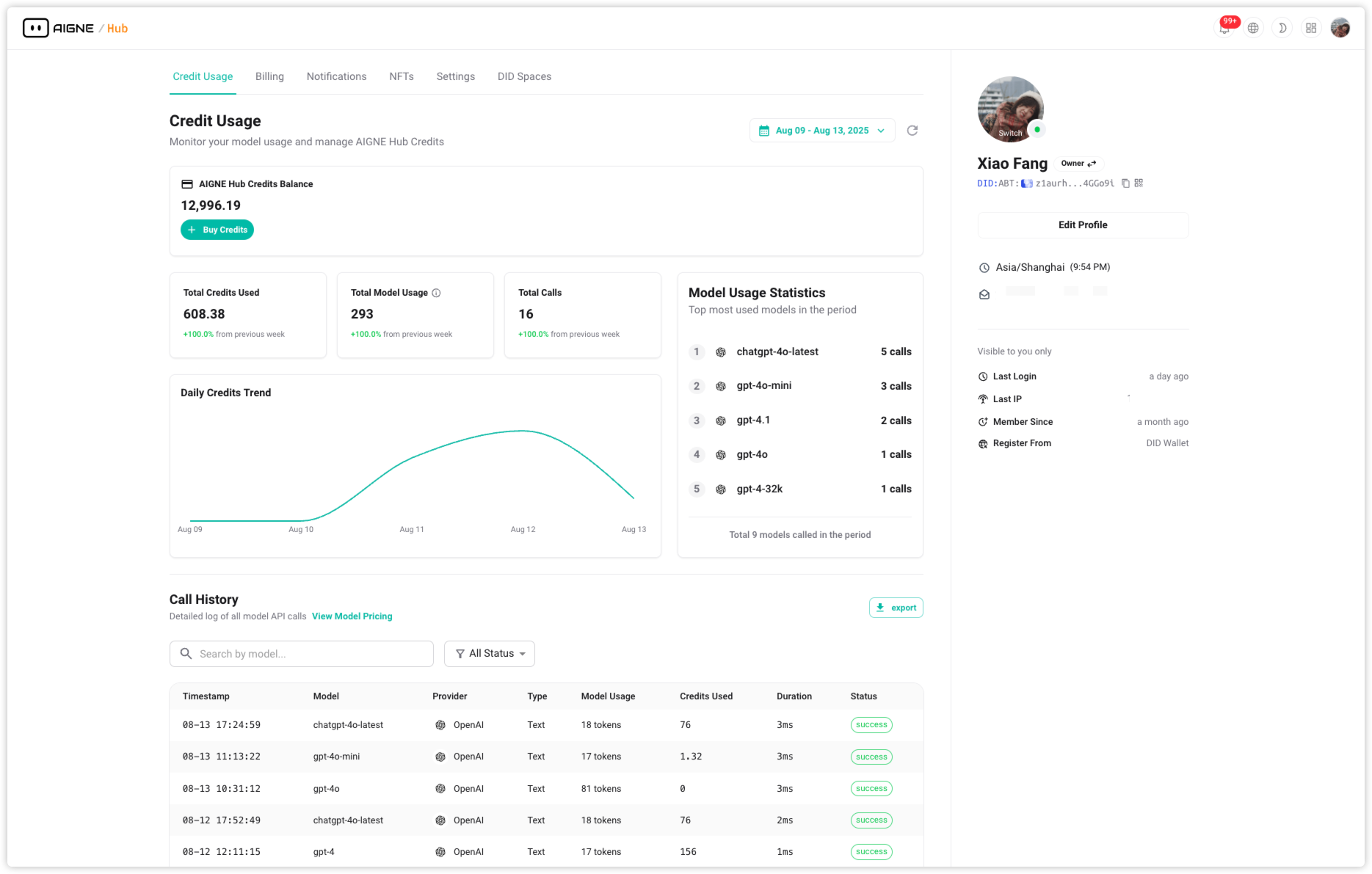

- A centralized dashboard to track costs, performance, and security

- Built‑in observability and analytics tools

- An integrated credit‑based billing system

With AIGNE Hub, you can scale AI features, monitor usage, and even monetize them—without juggling multiple systems.

The Central Hub of the AIGNE Ecosystem#

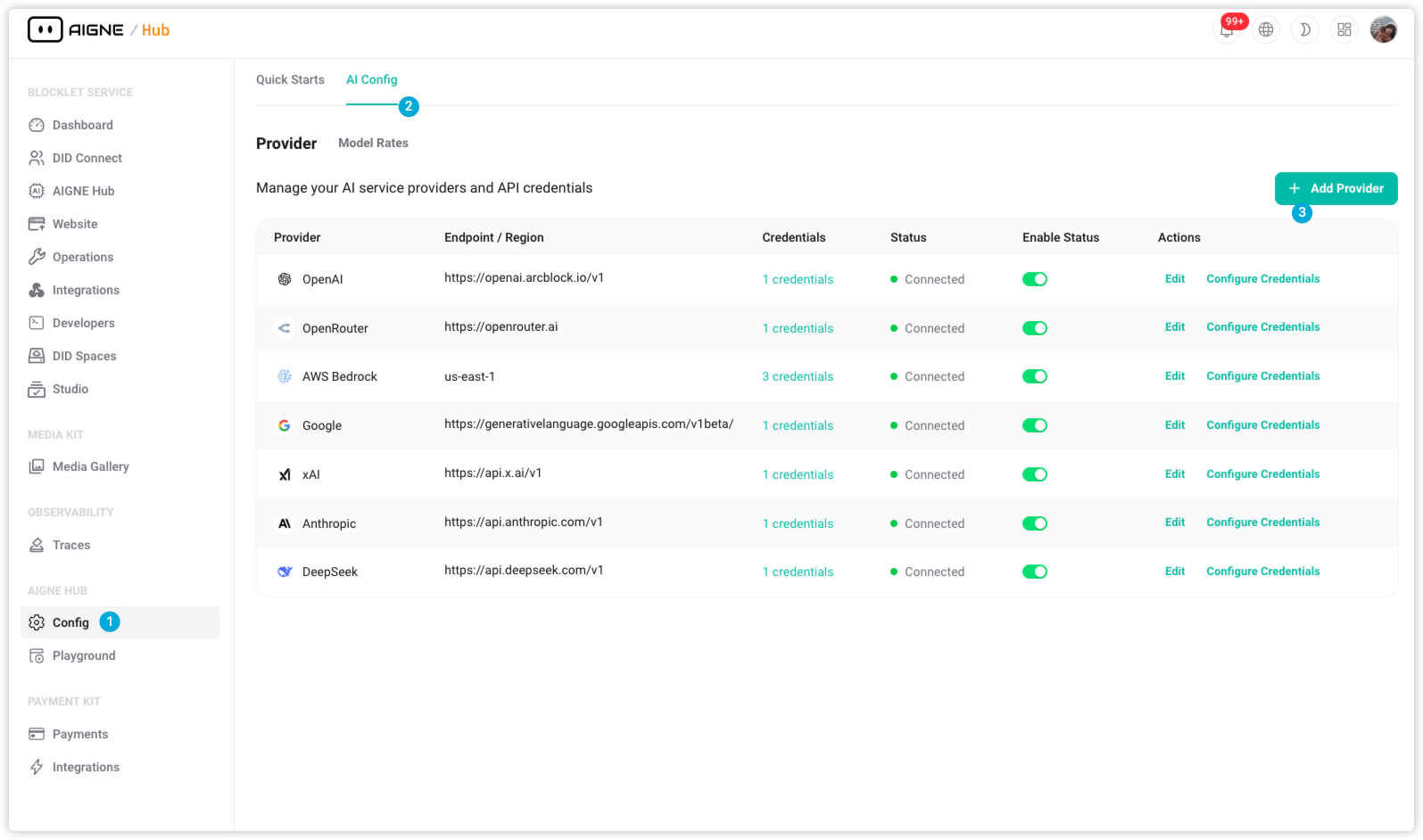

AIGNE Hub is the unified entry point for multiple LLM and AIGC service providers. Out of the box, it includes:

- OAuth authorization

- Credit‑based billing

- Usage analytics

This allows teams to launch AI‑driven apps without managing separate keys, dashboards, or billing platforms.

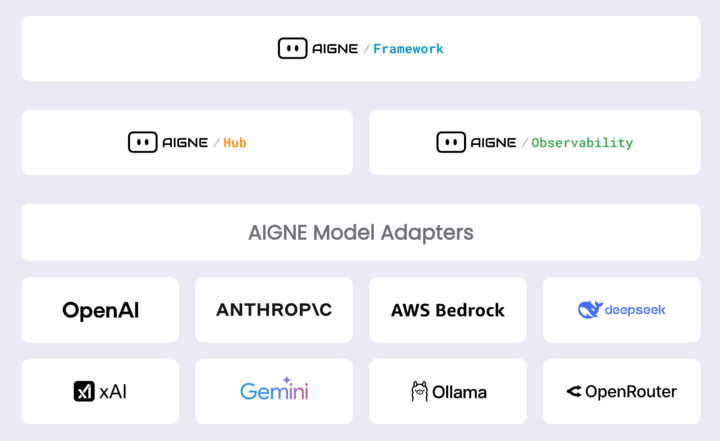

AIGNE Architecture Diagram#

Key Upgrades#

Personal Mode vs Enterprise Mode#

Whether you’re an independent developer or managing a multi‑tenant AI platform, AIGNE Hub adapts:

- Personal Mode (default) – Ideal for local development with direct provider access. Self‑host, use your own API keys, and skip billing setup.

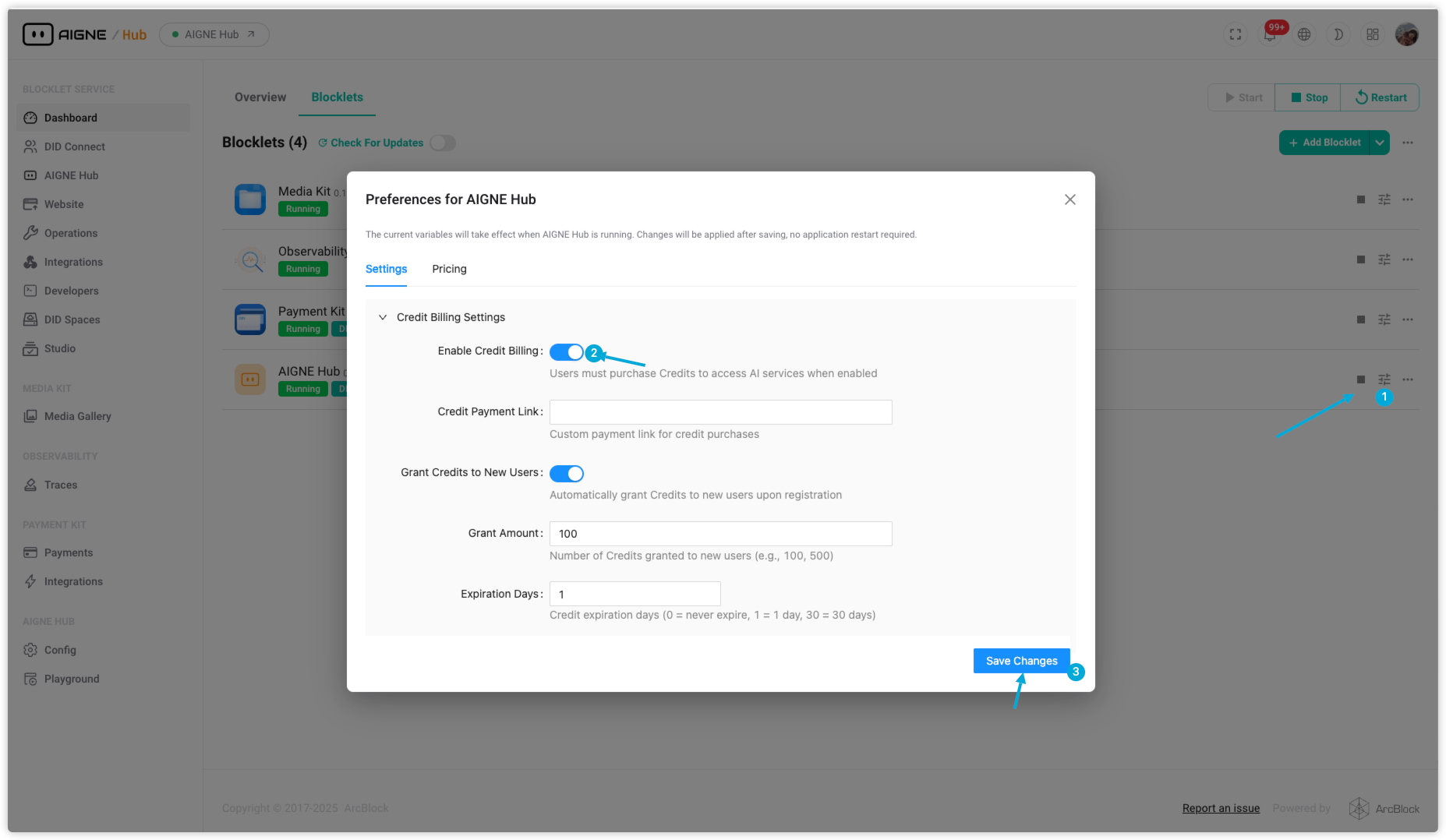

- Enterprise Mode – Enable multi-user capabilities with credit-based billing, where usage is metered per token.

- Enterprise Mode Setup:

- Install the Payment Kit component.

- Enable Credit Billing in AIGNE Hub → Preferences.

- Set base credit price, profit margin, and initial credits for new users.

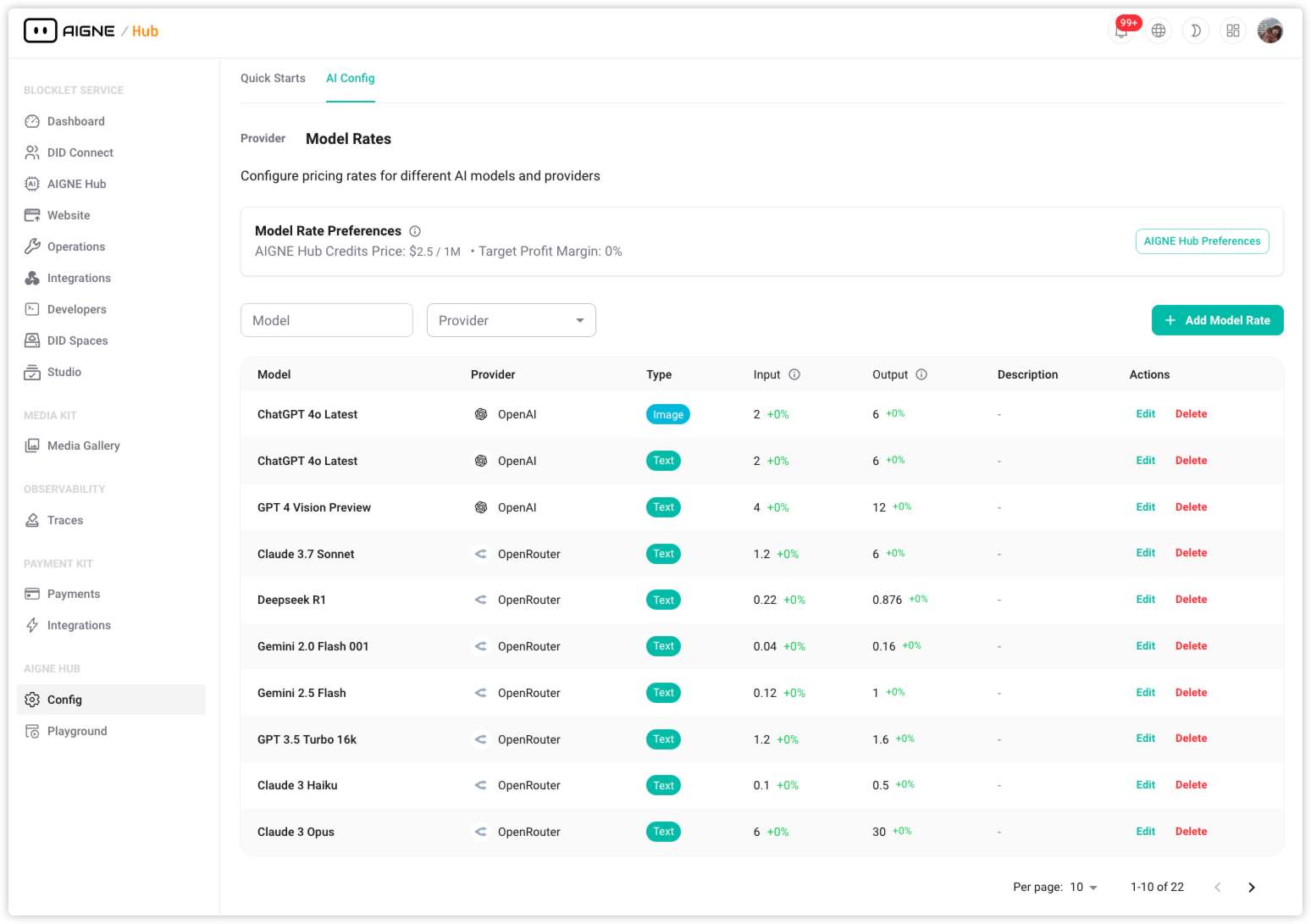

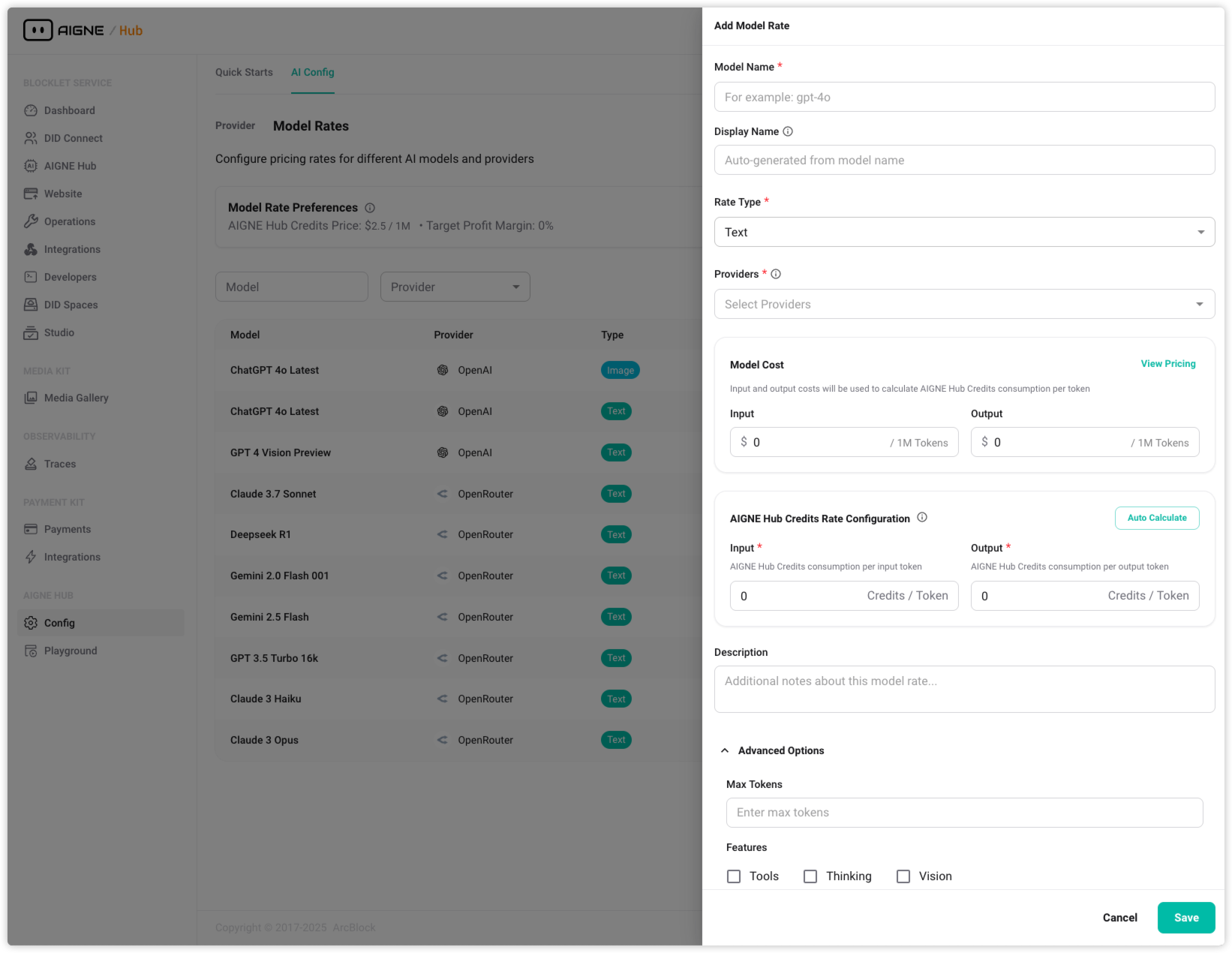

- Configure Model Rates

- Navigate to AIGNE Hub → Config → AI Config → Model Rates.

- Assign per‑model pricing for each provider.

- Set distinct credit rates for input/output tokens.

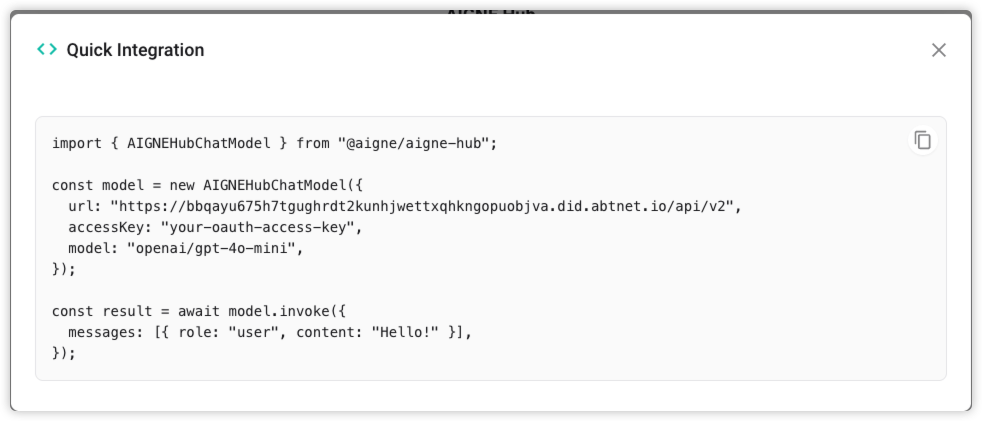
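
To make the billing settings above more concrete, here is a minimal sketch of how per-token credit metering could work. It is not AIGNE Hub's actual implementation: the `ModelRate` structure, the `rate_from_cost` formula (provider cost marked up by the profit margin, then divided by the base credit price), and all numbers are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class ModelRate:
    """Credits charged per token for one model (hypothetical structure)."""
    input_rate: Decimal   # credits per input token
    output_rate: Decimal  # credits per output token


def rate_from_cost(cost_per_token_usd: Decimal,
                   profit_margin: Decimal,
                   base_credit_price_usd: Decimal) -> Decimal:
    """Assumed formula: mark up the provider cost, then convert USD to credits."""
    return cost_per_token_usd * (Decimal(1) + profit_margin) / base_credit_price_usd


def credits_for_call(rate: ModelRate, input_tokens: int, output_tokens: int) -> Decimal:
    """Meter one call per token, using separate input and output rates."""
    return rate.input_rate * input_tokens + rate.output_rate * output_tokens


# Illustrative numbers only, not real provider prices.
margin = Decimal("0.20")        # 20% profit margin
credit_price = Decimal("0.01")  # one credit is priced at $0.01
rate = ModelRate(
    input_rate=rate_from_cost(Decimal("0.000001"), margin, credit_price),
    output_rate=rate_from_cost(Decimal("0.000002"), margin, credit_price),
)
cost = credits_for_call(rate, input_tokens=1000, output_tokens=500)
print(f"Credits charged: {cost}")
```

`Decimal` is used instead of floats so that very small per-token rates do not pick up rounding error as usage accumulates.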

Deep Integration with AIGNE Framework#

- Fully compatible with the AIGNE Framework and its provider adapters for unified model access, permissions, and usage tracking. Developers can use all Hub features via the `@aigne/aigne-hub` package.

Seamless Compatibility with Blocklet(s)#

- During the Blocklet boot sequence, AIGNE Hub injects runtime LLM configuration as environment variables directly into Blocklet components.

Built-in CLI Support#

- Run any connected model directly from your terminal with the `aigne run` command. No separate API key setup is required.

- Works in both Personal and Enterprise modes, which makes it well suited to rapid testing, demos, and quick queries.

Supported AI Providers#

Supported LLM providers include:

Cloud Providers

- Amazon Bedrock (AWS models)

- Google Gemini (text + image)

Model Labs

- OpenAI (GPT, DALL·E, Embedding)

- Anthropic (Claude)

- xAI (Grok series)

- DeepSeek

- Doubao

Multi-Model Platforms

- OpenRouter

- Poe

Local Models

- Ollama

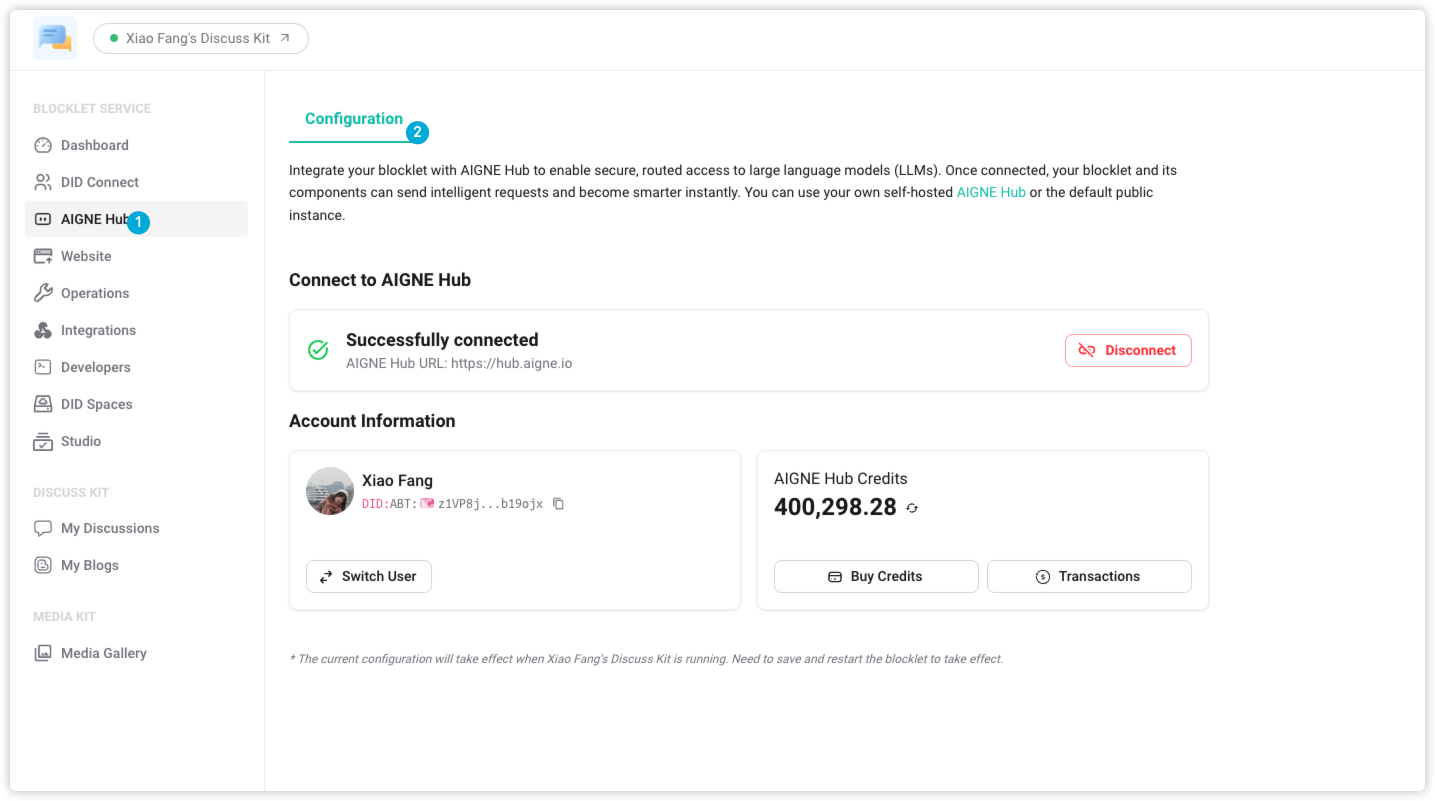

Upgrade from AI Kit’s Subscription Mode#

Coming from AI Kit’s pay‑as‑you‑go model? You can now switch to the credit‑based system:

- No local AI Kit installation required

- Remove the old setup and link your account via Services → AIGNE Hub.

Benefits for New and Existing Users#

To help you get started with AIGNE Hub, all new accounts receive a one-time credit boost, ideal for exploring features right away.

- Credits are valid for a limited time

- Applicable to all supported providers

- Full details provided upon activation

Resources#

Learn more and get started with AIGNE Hub using these resources:

- 🛍 AIGNE Hub Store Page

- 🏠 AIGNE Hub Official Website

- 📘 AIGNE Hub GitHub Repo

- 🧑‍💻 AIGNE CLI Doc

- 💬 Join the Community

With this release, ArcBlock’s AIGNE Hub delivers the flexibility, control, and scalability needed to streamline AI projects and unlock a seamless multi‑provider experience. Your next build starts here.